Reliability of sensitive data

Introduction and context

Within the Graduate Outcomes survey, some questions could be perceived by respondents as sensitive. Such questions are at particular risk of reduced data quality, for example through increased item non-response or the misreporting of answers. Many factors can influence responses to potentially sensitive questions, including the mode of completion, question wording, the presence of third parties during completion, and assurances about privacy, confidentiality or use of the data (Tourangeau and Yan, 2007; Ong and Weiss, 2000). On mode of completion, self-administered modes are generally found to increase reporting of potentially undesirable behaviours (Tourangeau and Smith, 1996; DeLeeuw, 2018). Confidentiality and privacy assurances have also been found to improve responses to sensitive questions. In some cases, however, these assurances can have the opposite effect, potentially because they bring data usage or privacy concerns to the forefront of the mind of a respondent who may not previously have considered them (Acquisti, Brandimarte and Loewenstein, 2015).

Income is one of the questions in the Graduate Outcomes survey that respondents could view as more sensitive, and the following section provides some insight into research carried out on this question this year. In previous editions of this report, we have also completed investigations into other areas, such as the subjective wellbeing data (2nd edition of the Graduate Outcomes Survey Quality Report) and employer name and job title (3rd edition of the Graduate Outcomes Survey Quality Report).

Methods and results

The salary and currency questions

Income is commonly considered a sensitive topic for a survey question, and it often has higher levels of item non-response because of the intrusive nature of the question and concerns about disclosure (Tourangeau and Yan, 2007). Since Year 3 of the survey, salary has only been asked of respondents who selected that they are paid in a currency of ‘United Kingdom, Pounds, £’. This reduced survey burden and the collection of unnecessary data, but also meant that the order of the questions was switched so that currency is asked before salary. In previous years, different strategies were used to try to increase question coverage, as having an optional currency question after salary was resulting in the collection of unusable data. During the second year of the survey it was therefore made compulsory to answer currency when salary was populated, which heightened the risk of survey drop-out but ensured currency was provided. The new question order removes the need for a compulsory response in this block, and it was hoped this would reduce drop-out rates and ensure that respondents who provided a salary always have the corresponding currency information available.
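The two routing rules described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names and the representation of an unanswered question as `None` are hypothetical, and only the currency label is taken from the text.

```python
from typing import Optional

# Currency option quoted in the text; everything else here is hypothetical.
GBP_LABEL = "United Kingdom, Pounds, £"


def salary_is_asked(currency: Optional[str]) -> bool:
    """Year 3 onwards: salary is only shown after a UK £ currency answer."""
    return currency == GBP_LABEL


def year2_block_is_valid(salary: Optional[int], currency: Optional[str]) -> bool:
    """Year 2 rule: currency became compulsory once salary was populated."""
    return salary is None or currency is not None
```

Under the Year 3 ordering, a salary can never be recorded without a currency, which is why no compulsory check is needed in the block.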

In addition to these changes, hover text was added to a few questions, including salary, for cohort D of Year 3 in order to reassure graduates about the use of their data. Assessments last year indicated that the change in order and the addition of hover text had increased the response levels to either of the questions. However, it was difficult to determine the actual impact of these changes last year, and item non-response varies depending on the assessments made. Further detail on past assessments and changes made to the salary question can be found in previous versions of the Survey Quality Report. It was determined that there would be value in investigating item non-response again this year, once further data had been collected and the years were more comparable (Table 1). In Year 4 itself, further attempts have been made to improve response to the question: a new system of calculation for typical salary ranges for full-time graduates was introduced, and warning limits for part-time work were removed, to reduce the number of validation warnings and ensure they are as relevant as possible. Additionally, following the findings in the 3rd edition of the quality report, an action plan to improve response rates on the Computer Assisted Telephone Interviewing (CATI) mode was developed and implemented from cohort C. Equally, a pop-up was added for desktop completion from cohort D, which informs graduates who are exiting the survey that the question is optional, in order to try to reduce overall drop-out from the survey.

Table 1: Table indicating item response rates for salary, split by completion mode, and including a base description of restrictions

| Question | Telephone (CATI) Y3 | Telephone (CATI) Y4 | Desktop Y3 | Desktop Y4 | Mobile Y3 | Mobile Y4 | Base Description |
|---|---|---|---|---|---|---|---|
| Annual Pay | 78.7% | 82.7% | 89.6% | 87.7% | 90.3% | 90.9% | Graduates who were in paid work for an employer or in self-employment/freelancing and have indicated that they receive their salary in UK £ in the previous question. |
| Currency | 98.7% | 98.7% | 98.8% | 98.4% | 98.0% | 97.5% | Graduates who were in paid work for an employer or in self-employment/freelancing, who answered the last mandatory question in the section they were routed down before being shown currency (routing may vary based on activity selections). |

Response to salary has increased from Year 3 to Year 4 overall, and rates have also risen for both of the most common forms of completion shown in Table 1: CATI (78.7% to 82.7%) and the mobile element of online (90.3% to 90.9%). This may indicate that the various steps that were put in place, such as the hover text introduced towards the end of Year 3 and the action plan for the CATI element, have had a positive impact on the response to the question. Although item non-response on CATI has fallen, this mode unsurprisingly still sees lower levels of response to salary than the online modes. This is as expected, as self-administration modes are known to increase the likelihood of a respondent disclosing sensitive information (Brown et al., 2008), and we do see that more respondents provide this information on the online completion modes in Graduate Outcomes. Response levels for the desktop element of online completion appear to have dropped slightly (89.6% to 87.7%), although this mode contributes fewer responses to the question. The pop-up was introduced to try to combat these lower levels, which could be due to numerous factors. Further tracking and research will be required over the next year to determine whether the pop-up is having a beneficial effect or should be removed; assessments of subsequent questions in particular will help to determine whether it is reducing overall drop-out from the survey. Generally, questions about income often see much higher levels of item non-response (Tourangeau and Yan, 2007), and in Graduate Outcomes, item non-response is higher for this question than for many others in the survey. However, when compared with other surveys and the rates of response that might be expected for such a question, levels of response are good. Regardless, this question remains a high priority for improvements and for ensuring the information collected is as useful as possible.
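As a quick check on the direction and size of these movements, the Year 3 to Year 4 changes can be recomputed from the salary row of Table 1. The sketch below uses only the published percentages; the variable and function names are illustrative, and the result is a difference in percentage points rather than a test of statistical significance.

```python
# Salary ("Annual Pay") item response rates from Table 1, in percent.
salary_response = {
    "Telephone (CATI)": {"Y3": 78.7, "Y4": 82.7},
    "Desktop": {"Y3": 89.6, "Y4": 87.7},
    "Mobile": {"Y3": 90.3, "Y4": 90.9},
}


def point_change(rates: dict) -> float:
    """Year 3 to Year 4 change in percentage points, rounded to one decimal."""
    return round(rates["Y4"] - rates["Y3"], 1)


changes = {mode: point_change(rates) for mode, rates in salary_response.items()}

for mode, change in changes.items():
    print(f"{mode}: {change:+.1f} percentage points")
```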

Distribution of responses received to salary

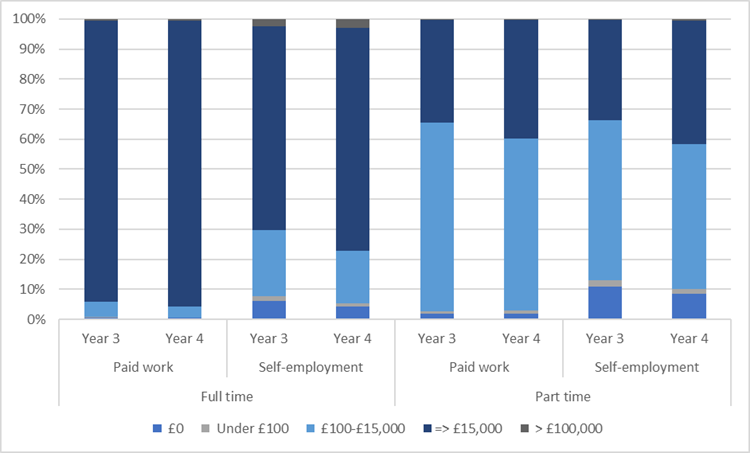

Whilst item non-response is a useful indicator, it is important to assess data quality in other ways. Another indication of data quality in relation to salary may be a reduction in salaries outside the ‘expected’ range. Previous changes to the question aimed to reduce confusion that may have been causing some graduates to provide one-digit or two-digit salaries, but whilst this seemed effective, some particularly low or high salaries remained. Though this cannot be avoided fully, and some may be genuine responses, it is likely that some of these responses result from graduates feeling reluctant to provide a genuine answer because of the sensitive nature of the question, perhaps leading to measurement error (Tourangeau and Yan, 2007). To aid in determining improvements in the online salary provision as a result of the addition of hover text, distributions of salaries provided in United Kingdom Pounds are split into broad salary groupings for quality analysis purposes and are shown in Figure 1.

Figure 1: Grouped salaries provided by graduates with a currency of UK £ in cohort D of year three and year two

Table 2: Proportion of "0" entries split by completion mode

| Year | Telephone (CATI) | Desktop | Mobile |
|---|---|---|---|
| Year 3 | 1.5% | 0.9% | 0.6% |
| Year 4 | 1.0% | 1.1% | 0.6% |

As a broad overview, an increase in the provision of salaries in the group of more than £15,000 to £100,000 may be useful in determining a potential improvement in quality, for the full-time salary provision in particular. This increase can be seen across both employment types: not only for full-time, but also for part-time graduates. Provision of salaries of £0 has decreased across the work types. Table 2 also investigates responses of “0” by completion mode, and selection has dropped across both of the most commonly utilised completion modes, although at the precision shown in Table 2 the fall is visible for CATI (1.5% to 1.0%) while mobile rounds to 0.6% in both years. This may be a positive sign that some graduates felt more comfortable providing a salary in Year 4. Furthermore, many of the groups also appear to have seen a reduction in graduates providing salaries in both the under £100 and £100-£15,000 groups. Whilst it must be noted that a number of factors will influence these salary groupings, this does seem to be a positive indication that provision of salaries may have improved.
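The broad groupings discussed above could be derived along the following lines. This is a minimal sketch rather than the method used for Figure 1: the band names follow the text, but treating each upper boundary as inclusive, the open-ended top band, and the function names are all assumptions.

```python
from collections import Counter
from typing import Dict, List


def salary_band(salary: int) -> str:
    """Assign a non-negative UK £ salary to a broad quality-analysis band.

    Inclusive upper edges and the top band are assumptions, not from the report.
    """
    if salary == 0:
        return "£0"
    if salary < 100:
        return "Under £100"
    if salary <= 15_000:
        return "£100 to £15,000"
    if salary <= 100_000:
        return "More than £15,000 to £100,000"
    return "More than £100,000"


def band_shares(salaries: List[int]) -> Dict[str, float]:
    """Percentage of responses falling in each band, to one decimal place."""
    if not salaries:
        return {}
    counts = Counter(salary_band(s) for s in salaries)
    return {band: round(100 * n / len(salaries), 1) for band, n in counts.items()}
```

Applying `band_shares` separately to each year, work type or completion mode would give proportions comparable in form to those in Figure 1 and Table 2.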

Conclusions

The various interventions that have been put in place to improve response to the salary question seem to have had generally positive impacts on both the response levels to salary and the salaries being provided. Reassurances around the question do not appear to have had a negative impact. This was important to determine, as confidentiality reassurances can affect respondents differently: they can either increase divulgence or reduce it if their inclusion raises privacy concerns that were not present previously (Acquisti, Brandimarte and Loewenstein, 2015). Equally, interventions on the CATI completion mode appear to have had positive effects on response to salary, which is particularly important given the difficulty in achieving responses to sensitive questions on this mode in comparison to the online self-completion mode.


References

Acquisti, A., Brandimarte, L. and Loewenstein, G., 2015. Privacy and human behavior in the age of information. Science, 347(6221), pp.509-514.

Brown, J.L., Vanable, P.A. and Eriksen, M.D., 2008. Computer-assisted self-interviews: a cost effectiveness analysis. Behavior Research Methods, 40(1), pp.1-7. https://doi.org/10.3758/brm.40.1.1

DeLeeuw, E.D., 2018, August. Mixed-mode: Past, present, and future. In Survey Research Methods (Vol. 12, No. 2, pp. 75-89).

Ong, A.D. and Weiss, D.J., 2000. The impact of anonymity on responses to sensitive questions. Journal of Applied Social Psychology, 30(8), pp.1691-1708.

Tourangeau, R. and Smith, T.W., 1996. Asking sensitive questions: the impact of data collection mode, question format, and question context. The Public Opinion Quarterly, 60(2), pp.275-304. http://www.jstor.org/stable/2749691

Tourangeau, R. and Yan, T., 2007. Sensitive questions in surveys. Psychological bulletin, 133(5), p.859.