Operational survey information

As the Graduate Outcomes survey is live, we’ve shared some useful information for providers which outlines how HESA is delivering the Graduate Outcomes survey.

This content will grow and change over time. To respond to provider queries sooner, we will add to this information gradually rather than waiting until it is complete. As we learn from each cohort, we may also change the approaches outlined on these pages, and we will endeavour to update this page accordingly.

How are IFF introducing themselves at the start of the interviews?

IFF interviewers introduce the Graduate Outcomes survey as the reason for the call and state they are calling on behalf of the provider for the particular graduate. If they are challenged further, they will explain that they are a research agency that has been appointed by HESA to carry out this work. If required, the interviewer can also advise that the survey has been commissioned by the UK higher education funding and regulatory bodies.

How do you ensure that where a graduate is in a different time zone, this is reflected in the time of call?

Where we have collected the data fields for international telephone numbers, the computer-assisted telephone interviewing (CATI) system automatically allocates the appropriate time zone to ensure the graduate is contacted at the correct time of day. The CATI system determines the location of the telephone number and assigns it to the correct call queue. This is another reason for personal contact data needing to be as accurate as possible.

What does the telephone number come through as? Will it look like a spam call?

The contact centre operates using a geo-dialling system, whereby the geographical location of providers is taken into consideration. This use of familiar area codes serves to mitigate concerns about spam calling. This approach is supported more generally by existing best practice within the market research sector.

Despite the benefits of a geo-dialling system, the use of phone numbers that are visible but unknown to respondents does increase the likelihood that they will repeatedly ignore or even bar the calls, especially where they are called multiple times from the same number. Therefore, during the second half of year one (17/18), the approach was further enhanced by changing the telephone numbers used for some of the fieldwork period. This approach is being replicated in year two (18/19).

What happens when a graduate answers, but is unable to take the call?

In these instances, where we know the contact details are correct but the timing of the call is inconvenient, the interviewer will ask for the graduate’s preferred date and time to undertake the survey. The interviewer will log these details into the CATI system and the graduate then will not be made available to be called until the date and time specified. The graduate is also given the option of completing the survey online.

How does the CATI system react to a graduate switching modes (phone to online and vice versa)?

If a graduate is called and states that they would prefer not to take the survey over the telephone, the IFF interviewer asks whether they would be willing to complete the survey online instead. If they consent, the interviewer checks the email address with the graduate and closes the interview.

On closing the interview, the graduate will be automatically sent an invite to participate in the online survey, via the email address checked on the call. Where a graduate has already started the survey online, they will be withheld from telephone interviewing until such a point where it is deemed unlikely that they will go on to complete the survey.

At this point, the graduate will be made available in the CATI system for telephone interviewing. An IFF interviewer will call the graduate, and upon introducing the survey and gaining the consent to participate, will start the survey at the point the graduate reached in the online survey.

What’s the approach to quality assurance?

All interviews are recorded digitally to keep an accurate record of interviews. A minimum of 5% of each interviewer’s calls are reviewed in full by one of IFF’s Team Leaders. Quality control reviews are all documented using a series of scores. Should an interviewer score below the acceptable level, this is discussed with them along with the issue raised, an action plan is agreed and signed, and their work undergoes further quality control.

Team Leaders rigorously check for tone/technique, data quality and conduct around data protection and information security. In addition, where Oblong are unable to code verbatim responses, these will be returned to IFF who will take steps to obtain and supply better quality verbatim by listening back to the interview and where necessary calling the graduate again.

How are opt-outs handled?

When a graduate tells the interviewer on a call that they wish to opt out, the IFF call handler records this on the system so that the graduate is not contacted again by any form of communication.

Where are IFF’s interviewers based?

IFF operates a CATI centre in their offices in London, which is supported by interviewers working remotely within the United Kingdom. These remote workers access the same system and interface as London operators and they are treated no differently to interviewers based in the London office. Each machine used by home workers is under the complete control of IFF’s IT department.

What’s the approach to training IFF’s interviewers?

Interviewers receive full training covering practical, theoretical and technical aspects of the job requirements. For quality control purposes, Team Leaders provide ongoing support throughout, harnessing interviewer skills and coaching around areas for improvement. This is done through top-up sessions, structured de-briefs and shorter knowledge sharing initiatives about “what works”.

For Graduate Outcomes, all interviewers receive a detailed briefing upon commencing interviewing, covering the purpose of the survey, data requirements (for example level of detail needed at SIC and SOC questions), running through each survey question, and pointing out areas of potential difficulty so objections and questions can be handled appropriately and sensitively.

To aid providers’ communication with graduates, we’ve outlined the general engagement plan below that will be implemented for each cohort. See more information on survey timings (course end dates, contact periods and census weeks).

Our full engagement strategy is an internal-only document and incorporates an intricate plan which includes a range of methods, timings and scenarios. This has been crafted using best practice data collection and research methods. We have also worked with experts from the ONS and Confirmit, and liaised with the Graduate Outcomes steering group, to create a robust strategy which carefully balances the need to gain responses using a blend of methods across all of the contact details we hold for each graduate.

The strategy will be reviewed over time and we will make changes and refinements where needed.

Engagement methods

- The primary engagement method will be email - we will also send email reminders at regular intervals.

- Graduates will receive text messages (SMS) from ‘GradOutcome’ which also contain links to the online survey (where we have mobile numbers).

- Graduates will also receive phone calls from IFF Research (our contact centre) on behalf of providers. Note - in year four (C20071), non-EU international graduates will not receive phone calls regarding the survey. Find out more about this decision.

Timetable

The engagement plan for the fieldwork period can be found below. See more on response rates.

| Week | Milestone |

|---|---|

| Week 0/1 | Pre-notification emails sent to all graduates with approved contact details |

| Week 1/2 | |

| Week 2/3/4 | |

| Week 4/5/6 | |

| Week 7/8/9 | |

| Week 9/10/11 | |

| Week 12 | |

| Week 13 | |

| Week 14 | |

Communications

You can view the sample email and SMS messages being sent to graduates in survey materials below.

Encouraging response rates

Administration of invitations and reminders is carefully managed and considers timing, frequency, volume and journey of respondents. Every successful cycle of reminders informs the delivery of future reminders. All graduates across the entire sector are treated equally in terms of the communications they receive from us.

Partials to complete

Encouraging graduates who have started the survey to finish it is a key part of our engagement strategy. Neha (our Head of Research and Insight) has written about some key aspects of this approach.

Read 'from partial to complete' blog by Neha Agarwal

Focusing on the mandatory questions

The majority of the mandatory Graduate Outcomes questions are the same as those required for a valid response in DLHE. Maintaining a set of mandatory questions ensures we have the correct routing in place and the required data for SIC/SOC coding purposes.

To ensure we receive maximum response rates, we have taken a number of steps to ensure each graduate is encouraged to complete these mandatory questions as a priority:

- non-mandatory questions are optional and can be skipped

- the subjective wellbeing and graduate voice questions are placed at the end of the survey, after the mandatory questions have been completed

- opt-in question banks are also placed at the end of the survey, after the mandatory questions have been completed.

How are contact details prioritised for surveying?

We’ve provided some guidance in relation to the number of contact details required by provider and how they are returned to us. This is all detailed in the Graduate Outcomes Contact Details coding manual. We've summarised it here:

EMAIL: We send the email invitations and reminders to EMAIL01 first, followed by EMAIL02-10. It is recommended that the ‘best’ email addresses are returned for a graduate.

UKMOB / UKTEL / INTTEL: It is recommended that the ‘best’ telephone numbers for a graduate are returned for UKMOB / UKTEL / INTTEL. Graduates will be called on their first telephone number before subsequent numbers are tried, and once successful contact is made, this contact detail is prioritised for any future calls.

Mobile numbers are more likely to result in successful contact and therefore, UKMOB (mobile) numbers are called before UKTEL (landlines), followed by INTTEL (international) numbers, where applicable.
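The ordering described above can be sketched in a few lines of Python. This is only an illustration of the stated rules, not the CATI system's actual logic: the `PhoneNumber` type, the `position` attribute and the `call_order` function are hypothetical names introduced for the example.

```python
from dataclasses import dataclass

# Field order taken from the guidance above: mobiles, then landlines,
# then international numbers.
FIELD_PRIORITY = {"UKMOB": 0, "UKTEL": 1, "INTTEL": 2}


@dataclass(frozen=True)
class PhoneNumber:
    """Hypothetical record for one returned telephone number."""
    field: str     # "UKMOB", "UKTEL" or "INTTEL"
    position: int  # order in which the provider returned it (1 = first)
    number: str


def call_order(numbers, last_successful=None):
    """Return numbers in the order they would be tried.

    Numbers are sorted by field priority, then by the order in which they
    were returned. A number that previously led to successful contact is
    moved to the front of the list.
    """
    ordered = sorted(numbers, key=lambda n: (FIELD_PRIORITY[n.field], n.position))
    if last_successful is not None and last_successful in ordered:
        ordered.remove(last_successful)
        ordered.insert(0, last_successful)
    return ordered
```

For example, a graduate with two mobiles, a landline and an international number would be tried on the first mobile, then the second mobile, then the landline, then the international number, unless an earlier call had already succeeded on one of them.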

Can you explain how to determine the ‘best’ contact details?

What we mean by ‘best’ is the supply of contact details that are most likely to elicit a response to the survey. This can be determined by recent contact with the graduate via this contact detail.

Some providers will be using email clients (e.g. Mailchimp, Raiser’s Edge) to despatch their communications to graduates which often provide rich insight into the behaviour of the email recipient. This includes where the recipient has opened the email, clicked on a link or replied to it. This provides the evidence required to determine that the graduate is actively using this contact detail and is therefore useful in terms of survey engagement. If the provider is using these systems to carry out the suggested graduate contact during the 15 months post-course completion period, this provides a regular feed of information about this contact detail to help make this determination.

Where providers do not have access to this information, and multiple email addresses for a graduate are known, then provided these remain accurate for the graduate, it is recommended these are all returned to give HESA additional opportunities to contact the graduate.

Click here to view the definition of 'best'.

Can you share the rationale for the pre-notification strategy?

We use a system called MailJet to help us with the issues related to internet service providers (e.g. Gmail, Office 365) blocking our email invitations. As part of this, in cohort D (17/18) we trialled new activity that aimed to improve our response rates by sending a pre-notification email (a warm up) to all graduates in that upcoming cohort. As we saw a positive return on email invitations, we determined that we will continue with the pre-notification strategy for future cohorts / collections.

Can you provide more detail about the subjective wellbeing survey questions, including how you ask graduates and how the data will be used?

You can read more about this in our blog - 'Asking graduates how they feel' by Neha Agarwal, Head of Research & Insight.

Can you share more detail about what each of the statuses in the provider portal progress bar means?

The provider portal user guide explains what each status means and who's included within each one.

Are providers allowed to contact graduates within a cohort (once it has opened for surveying)? (Updated October 2019)

We have previously supplied guidance that once a cohort has commenced surveying, providers should not be making direct contact with graduates for risk of over-communication, creation of potential bias and the fact that we cannot report on those who’ve chosen to opt out (providers should be respecting their wishes).

We have been exploring this in more detail within our response rate strategy work and have determined that there is considerable risk of bias and more importantly, HESA will not be able to measure and/or control it. This outweighs the possible benefits of getting some engagement from a hard to reach group. Therefore, the guidance above remains our position and we will not be pursuing the reporting of opt-outs.

What providers should not do:

- Under no circumstances should providers make direct contact (for example by email or phone) with graduates currently being surveyed.

What providers can do:

- Focus on brand recognition communications using non-direct channels such as provider websites and social media platforms including LinkedIn and Twitter. We have provided a suite of communications materials for this purpose.

- If you’re sending a regular email campaign with the primary purpose of sharing a general update, for example your Alumni newsletter sent to a mass audience, you could include a small feature about Graduate Outcomes. Just make sure the content included about Graduate Outcomes is low in prominence – it should not be more than 50% focused on Graduate Outcomes.

Providers play a vital part in the success of Graduate Outcomes. It’s important that providers are engaging with the cohort population directly in the build up to the contact period commencing as well as other key points in the student to graduate lifecycle. So, whilst contact mid-cohort is not advised, there are plenty of other opportunities to build awareness of the survey. Find out more.

Can you share survey paradata or response rates with providers?

In the creation of a centralised survey, our strategy does not include individualised reporting of this kind, so we cannot share survey paradata (data about the process by which the data was collected) at a provider level. This means that our approaches to making any developments to the survey design or engagement strategy will be carried out across the entire survey by reviewing the entire data set.

We will also not be looking to share any overall sector statistics as any release would require considerable contextual information and we are unable to prioritise the required activity at this time. We want providers to rest assured that this analysis and activity is taking place but in a measured and controlled manner.

How are you encouraging graduates who've started the survey but not yet completed to finish the survey?

This is a central part of our engagement strategy. Broadly speaking, graduates in the ‘started survey’ group will receive regular email and SMS reminders encouraging them to finish the survey. In addition, they will be allowed appropriate time to complete it online before they are followed up via telephone. Read more about how we aim to turn partials into completes or view the engagement plan.

How many times will a graduate be contacted to complete the survey?

It is impossible to provide a simple answer to this question as it depends on what type of engagement we have with each graduate across the cohort and across modes. The outline engagement plan shows the proposed contacts we will make for the online survey (via email and SMS message), and calls are carefully scheduled to complement this. Before a call is made, we will ensure that a graduate who has already started the survey online has had time to complete it in that mode. It then depends how successful the contact details are and whether any contact is made using them (i.e. do they pick up).

You can view our engagement statistics e.g. average number of emails / text messages sent, within the end of cohort reviews on the supplied infographic.

Can you share your stance on provider incentives?

We have taken feedback from the sector and assessed the internal requirements to implement incentives versus the potential impact on response rates. Due to this, and the other initiatives we have deemed higher priority, work to roll out incentives to increase online responses has been deprioritised.

Despite this, we strongly recommend that providers do not create their own incentives. Depending on the type of population breakdowns a provider may have, incentives could bias the results in favour of that population group. For a centrally run survey like Graduate Outcomes, incentives must be rolled out across the entire population so that all respondents are treated equally.

When will you permit providers to include their own questions in the survey?

In June 2018, we took the decision to postpone the inclusion of the provider questions in the survey due to the legal, financial and operational complexities which surround these and to allow providers to manage the potential demand for these. Many of these complexities still exist and, above and beyond normal operational activity, we are currently focusing internal resource on improving email invitation deliverability, continuous survey improvement and strategies to boost online response rates. We have therefore decided to postpone provider questions for another collection.

We have prepared some frequently asked questions about response rates. We will add to these over the coming months. View the response rate targets.

Response rates

Why has HESA not hit all of the response rate targets?

To reflect on this, we must look at the landscape in which this collection existed. We were affected by known issues such as the unknown brand and a culture of data sensitivity following the introduction of GDPR (making the collection and use of personal data more challenging than ever before). We also increased the gap between graduation and data collection to 15 months, which affects the quality of contact details. In addition, people are generally over-surveyed these days, making it harder to tackle survey fatigue.

The quality of contact details prevented us from making contact with some graduates and, even where we have high quality details, email providers such as Microsoft and Gmail have developed sophisticated ways to block email delivery to protect their users from unwanted or unexpected emails. The survey suffered from the latter of these issues in the first half of the year, but things improved significantly during the second half. If the technological improvements we implemented later had been available from the start, we would have been able to close the gap between the response rate and the targets even further.

It is also worth noting that prior to starting Graduate Outcomes we did not have a baseline to set realistic targets for this brand new survey. The assumptions around online completion rate and impact of the shift from a provider-led to a centralised survey with a 15-month gap were not based on a prototype. Outputs from the first year of Graduate Outcomes should inform the discussion on targets moving forward.

While response rates are an indicator of data quality, they are not the only indicator. Having a responding sample that is representative of the population in terms of characteristics is more important. This has been our main objective in Graduate Outcomes – a high response rate from a representative sample.

What does the lower response rate mean for data quality of outputs?

Response rates are one of many indicators of data quality. They are not necessarily predictive of non-response bias. That is to say that even with low response rates, survey data may be representative of the population. The impact of non-response is what we aim to alleviate as far as possible through case prioritisation and weighting of the responses we achieve, so that outputs can be more representative of the population.

How will HESA be using weightings in the final data?

Following an assessment by HESA researchers working in consultation with the Office for National Statistics (ONS), the decision was made not to apply weighting to data and statistics from the 2017/18 Graduate Outcomes survey. Find out more in the Graduate Outcomes dissemination policy or our more detailed technical paper.

Are you planning to make any changes to the targets for the next collection?

The first year of Graduate Outcomes has provided a helpful baseline for the survey. Given some of the data collection issues we experienced early on, we hope to iron them out in the second year and get a revised baseline. We may revisit the targets at that stage and discuss them with our Steering Group.

My provider’s response rates do not reflect the overall response rates for the collection – how will this impact my final data?

The quality of the data at provider level cannot be determined by comparing the provider response rate with the overall response rate. Even with low response rates, survey data may be representative of the population.

All graduates at all providers have received the same level of engagement. There can be multiple reasons why a provider’s response rates are lower than others in the sector. Some of the factors responsible for high or low response rates are quality of contact details, prior awareness of Graduate Outcomes among graduates and a general preference towards participating in surveys.

How many times did you contact my graduates? How do I know you contacted them enough?

On average, it took 5 calls to achieve a completed survey response for year one (17/18). The number of calls made to non-respondents was a lot higher. More detailed statistics are made available in the end of cohort reports.

The amount of contact depends on what type of engagement we have with each graduate across the cohort and across modes. The outline engagement plan shows the proposed contacts we will make via email and SMS message, and calls are carefully scheduled to complement this. Before a call is made, we will ensure that a graduate who has already started the survey online has had time to complete it in that mode. It then depends how successful the contact details are and whether any contact is made using them (i.e. do they pick up). Successful contact with graduates heavily relies on the quality of contact details. Invalid and inactive email addresses and phone numbers will inevitably result in no contact.

Did you stop making contact with the target groups once you hit the target?

No, we carried on contacting people even if the main targets had been achieved. This is because we aim to get a representative sample of respondents whose demographic characteristics are similar to the population. As these characteristics are not mutually exclusive, we cannot make survey decisions based on just one target group.

Did HESA take valid answers from third parties (i.e. the graduate's parents, spouse, etc) as part of the approach?

Yes, data from a third party were collected for all providers except English FECs. This was introduced in the later stages of a cohort to allow a sufficient amount of contact with the primary respondents, as data from a third party is likely to be of a lower quality. The volume of third-party data has been limited to no more than 10% of a provider’s total responses (if they had at least 10 graduates in the population; if not, the provider was excluded from third-party data collection). Subjective questions such as salary, graduate voice and wellbeing are excluded from interviews with third parties.
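The two thresholds above reduce to simple arithmetic. The sketch below illustrates them under the assumption that "total responses" includes the third-party responses themselves; the function names are hypothetical, and it does not describe how the limit is enforced during fieldwork.

```python
MIN_POPULATION = 10         # providers with fewer graduates are excluded
MAX_THIRD_PARTY_PERCENT = 10  # cap as a percentage of total responses


def third_party_eligible(population: int) -> bool:
    """A provider qualifies only with at least 10 graduates in the population."""
    return population >= MIN_POPULATION


def within_third_party_cap(third_party_responses: int, total_responses: int) -> bool:
    """True if third-party responses are no more than 10% of total responses.

    Integer arithmetic avoids floating-point rounding at the boundary.
    """
    return third_party_responses * 100 <= MAX_THIRD_PARTY_PERCENT * total_responses
```

Under these assumptions, a provider with 200 total responses could have up to 20 of them supplied by third parties, while a provider with nine graduates in the population would have none.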

Are you including partial responses in the overall response rate?

Partial responses (that did not meet the minimum criteria but contain sufficient information required for key statistical outputs) will be included in the final data deliveries to providers and statutory customers and published outputs. They are not included in the overall response rates, which are based only on completed surveys that meet the criteria for a minimum response.

What can I do to improve my response rate?

There are many ways for a provider to improve their response rates and they are focused around two key themes:

Increase brand awareness - Providers play a vital part in the success of Graduate Outcomes. It’s important that providers are engaging with the cohort population directly in the build up to the contact period commencing. We know from our work looking at response rates, that engagement with the online survey is dependent on recognition of the Graduate Outcomes brand. Providers play a key role in legitimising the online survey via their own direct and trusted engagement routes.

To support providers, we’ve created a contact plan to follow and we have supplied a whole range of communications resources in the Graduate Outcomes brand that can be used and personalised by a provider. We are also collating provider case studies to share ideas where tactics have worked and could be replicated.

Improve graduate contact details - HESA can only engage with graduates where contact details are accurate and up to date. This relies on a holistic approach to this process which includes the collection and maintenance of contact details and submission. We have provided lots of information on how to approach this using the links provided. Additional support material can be found in the Contact Details coding manual, in particular, the following pages:

- Quality rules - help providers to identify duplicated data, shared data and non-permitted data

- Additional notes - detail in data items including UKMOB, UKTEL, INTTEL and EMAIL helps providers ensure the data submitted is in the format required

- Contact details guidance for providers - outlines what HESA classifies as accurate personal contact details.

Will you be looking to change your guidance to allow providers to make contact with graduates being surveyed to help increase the number of responses?

No, we will not be reviewing this guidance. Under no circumstances should providers make direct contact (for example by email or phone) with graduates currently being surveyed. This is to prevent the survey results being biased towards one or more groups of graduates which in turn reduces the precision of survey estimates. View our operational FAQs.

When will you be introducing incentives to increase the response rate?

We have taken feedback from the sector and assessed the internal requirements to implement incentives versus the potential impact on response rates. Due to this, and the other initiatives we have deemed higher priority, work to roll out incentives to increase online responses has been deprioritised. Under no circumstances must providers create incentives for their own graduates. View our operational FAQs.

In discussion with the Graduate Outcomes steering group, HESA has set the following response rate targets for Graduate Outcomes:

| Target group | Response rate target |

|---|---|

| UK domiciled full-time | 60% |

| UK domiciled part-time | 60% |

| Research funded | 65% |

| EU domiciled | 45% |

| Non-EU domiciled | 20% |

The above targets are applicable at a national, provider, undergraduate and postgraduate level. In addition to these, we will also monitor response rates for a range of socio-demographic and course characteristics such as age, gender, ethnicity and course.
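As a rough illustration of how a response rate can be compared against the targets in the table, the sketch below assumes the rate is the number of completed surveys divided by the number of graduates in the target group. That denominator is an assumption made for the example, and the function names are hypothetical.

```python
# Targets copied from the table above (percentages).
TARGETS = {
    "UK domiciled full-time": 60,
    "UK domiciled part-time": 60,
    "Research funded": 65,
    "EU domiciled": 45,
    "Non-EU domiciled": 20,
}


def response_rate(completed: int, population: int) -> float:
    """Completed surveys as a percentage of the group population (assumed denominator)."""
    if population <= 0:
        raise ValueError("population must be positive")
    return 100 * completed / population


def meets_target(group: str, completed: int, population: int) -> bool:
    """True if the group's response rate is at or above its target."""
    return response_rate(completed, population) >= TARGETS[group]
```

For instance, 450 completed surveys from 1,000 EU domiciled graduates gives a rate of 45%, which meets the target for that group; 440 would not.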

We understand the importance of good quality outputs for the sector as well as other users of our data. We will continuously monitor the progress of Graduate Outcomes against the targets set out above. We will employ robust research and statistical methodologies to ensure we are able to produce estimates that meet the required statistical quality standard as well as our users’ requirements.

To enable providers to support the survey, final versions of the survey materials and communications with graduates can be viewed below.

Emails

There are a number of emails in the engagement strategy:

We carry out the pre-notification strategy for all cohorts in a collection. This is sent to all graduates prior to the start of the contact period to provide key information about the survey and lets them know that they’ll receive their unique survey link very soon.

Pre-notification email - English

Pre-notification email - Welsh

Pre-notification email - international graduates

The email invitations sent within the contact period are derived from the core email text which is shared below. The other iterations (reminders) vary depending on the nature of the email and its role in the engagement strategy. Changes are mainly limited to the subject line and first paragraph.

Email - international graduates

Survey questions

You can view the survey questions on the page below split by type. The data items and routing diagram can be found in the Graduate Outcomes Survey Results coding manual.

Data classification (SIC / SOC)

Since the start of the survey, our approach to the evaluation and improvement of the quality of SOC data has evolved, resulting in significant improvements to data quality, coding practices and our engagement with the sector. We recognise the significance of this data for the sector, and we continue to make sure our quality assurance processes are robust and fit for purpose.

We are open to receiving feedback from providers on the accuracy of codes assigned to their own graduates. This is an optional exercise and should you choose to submit feedback we would like you to do so using the template provided.

Monday 8 January 2024 is the cut-off date for receiving any feedback to inform this year’s coding (C21072), to allow timely processing and dissemination of data. Any feedback received after this date will be used to inform the following year’s coding process.

The sections below outline more detail about the approach to SOC coding for year five (21/22) of Graduate Outcomes. Please refer to each of the sections below to understand the process, considerations and principles behind this approach. You will also find the link to the SIC and SOC methodology in coding methodology.

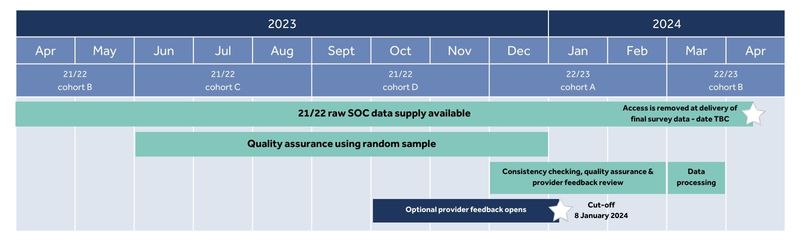

Below is an overview of the process and indicative timelines (all timings are subject to change). Please read the sections below to understand the key principles that have been incorporated into this process for year five. Further detail on timings regarding data dissemination will be provided in 2024.

Oblong (our coding supplier) has provided an outline of the coding methodology to provide visibility of the current process and to enable providers to feed back any questions or concerns, allowing us to continually improve. You’ll find this in the Graduate Outcomes methodology statement part two linked below.

View SIC / SOC coding methodology

Data quality is a fundamental part of the SOC coding process and we have worked extensively to create a robust and future-proofed approach. There are two components of our quality assurance plan, each aimed at a different aspect of data quality.

Identification of systemic coding issues using a random sample of records

A sample of records will be selected at random from each cohort and checked for accuracy and consistency by HESA. This activity will be carried out throughout the year and feedback will be shared with the supplier in a timely manner. It will make the quality assurance and data amendment process more comprehensive and manageable.

As part of this assessment we will identify any systemic issues which are defined as errors in coding, at an occupation level, which affect at least five records and at least 10% of the sample in that occupation group.
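The definition above combines two thresholds, both of which must be met. A minimal sketch of that test follows, assuming "the sample in that occupation group" means the number of sampled records in the group; `is_systemic` is a hypothetical name and not part of any HESA tooling.

```python
MIN_AFFECTED_RECORDS = 5   # at least five records affected
MIN_AFFECTED_PERCENT = 10  # and at least 10% of the sampled records in the group


def is_systemic(affected_records: int, sampled_in_group: int) -> bool:
    """Apply both thresholds from the definition above to one occupation group."""
    if sampled_in_group <= 0:
        return False
    enough_records = affected_records >= MIN_AFFECTED_RECORDS
    # Integer comparison avoids floating-point rounding at exactly 10%.
    enough_share = affected_records * 100 >= MIN_AFFECTED_PERCENT * sampled_in_group
    return enough_records and enough_share
```

Under these assumptions, five miscoded records in a sample of 50 would count as systemic, whereas four in a sample of 20 (too few records) or five in a sample of 60 (below 10%) would not.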

We do not aim for 100% administrative accuracy as this would be prohibitive both in terms of cost and resource usage. Rather, we aim to ensure that the data is robust, high-quality, and fit for purpose. Where there are one-off individual coding issues, these are likely to be randomly spread throughout the survey responses.

Identifying non-random anomalies in the distribution of coded data

A series of checks by HESA will focus on the distribution of coded data across providers and subject groups. In particular, the following checks will be carried out on the entire coded dataset and at suitable intervals:

- Compare distribution of records by SOC major group and subject across multiple years of Graduate Outcomes.

- Identify and investigate significant anomalies, for example, uncodable records, coding variations within a job title, unexpected trends by subject.

It is possible for these differences to occur naturally and not due to errors in coding. Therefore, the presence of such differences is not in itself a cause for concern.

HESA is open to feedback where providers believe one or more records have been coded incorrectly. However, following an assessment by HESA and Oblong, changes to coding will only be made where they are deemed widespread or systemic. Queries raised by providers will be assessed under the same principles as outlined under Considerations - quality assurance.

Monday 8 January 2024 is the cut-off date for receiving any feedback to inform this year’s coding (C21072), to allow timely processing and dissemination of data. Any feedback supplied to HESA after this date cannot be incorporated into 21/22 outputs. However, we remain open to this feedback to allow us to fully inform the coding of year six (22/23).

Feedback must only be submitted using the template provided below. To ensure we can prioritise data quality assurance and provide timely outputs to the sector, instead of responding to individual feedback submissions, we will share a sector wide update in 2024 with a summary of the outcomes of this year’s quality assurance activities.

Provider feedback template (xlsx)

Providers may see some changes to the raw data in the provider portal, but it is not final until the data delivery in spring 2024.

Here you will find the summary reports from each survey year. These reports provide an overview of the overall SOC coding and quality assurance process, including the outcomes from the HE provider feedback process.

Year four – C20072

Graduate Outcomes - a summary of the year four SOC coding assurance

Year three - C19072

Graduate Outcomes - a summary of the year three SOC coding assurance

Year two - C18072

Graduate Outcomes - a summary of the year two SOC coding assurance

Outcomes of independent assessment by the Office for National Statistics are available here:

Graduate Outcomes SOC coding - Independent Verification Analysis Report